Image: Ekkasit919/Getty Images

Deepfakes, it seems, still have a bright future ahead of them. According to scientists at the University of California San Diego, even the most advanced deepfake detectors can still be thwarted: inserting "adversarial examples" into each frame of a video is enough to fool the artificial intelligence. It is an alarming finding for researchers who are working to make detection systems better at spotting these faked videos.

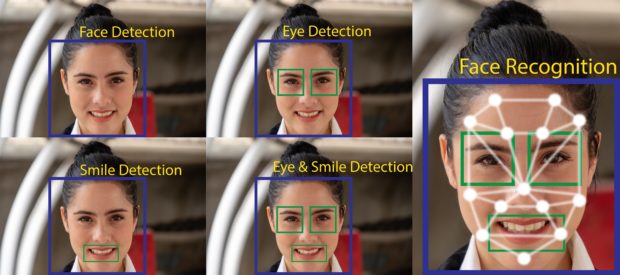

At WACV 2021 (the Winter Conference on Applications of Computer Vision), held from January 5 to 9, scientists from the University of California San Diego demonstrated that deepfake detectors have a weak point. By inserting "adversarial examples" into every frame of a video, they showed, an attacker can make the detector err and classify a deepfake as real. Adversarial examples are inputs that have been slightly manipulated in order to cause an artificial intelligence system to make mistakes. To recognize deepfakes, detectors focus on facial features, especially eye movements such as blinking, which these fake videos usually reproduce poorly.
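The basic idea behind an adversarial example can be illustrated with the classic fast gradient sign method. The detectors in the study are deep neural networks and the researchers' attack is more elaborate, so the sketch below is only an illustration under stated assumptions: a hypothetical "detector" that is plain logistic regression over a handful of pixel values, with made-up weights. Every name in it (`fake_score`, `perturb_frame`, `perturb_video`) is hypothetical. Each pixel is nudged by at most `eps` in whichever direction lowers the "fake" score, and the same procedure is applied to every frame.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fake_score(frame, weights, bias):
    """Hypothetical toy detector: probability that a frame is fake,
    modelled as logistic regression over flattened pixel values."""
    return sigmoid(sum(w * x for w, x in zip(weights, frame)) + bias)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def perturb_frame(frame, weights, eps):
    """One gradient-sign step that pushes a frame toward 'real'.

    For a linear logit z = w.x + b the gradient dz/dx is simply w, so moving
    each pixel by -eps * sign(w_i) lowers z as much as any change of at most
    eps per pixel can (before clipping). Pixels are clipped to [0, 1].
    """
    return [min(1.0, max(0.0, x - eps * sign(w))) for x, w in zip(frame, weights)]


def perturb_video(frames, weights, eps):
    """Apply the perturbation independently to every frame of the video."""
    return [perturb_frame(f, weights, eps) for f in frames]


if __name__ == "__main__":
    # Made-up weights and a two-frame "video" of four pixels each.
    weights, bias = [2.0, -1.5, 3.0, 0.5], -1.0
    video = [[0.6, 0.2, 0.5, 0.4], [0.5, 0.3, 0.5, 0.5]]
    for original, adv in zip(video, perturb_video(video, weights, eps=0.3)):
        print(f"{fake_score(original, weights, bias):.2f} -> {fake_score(adv, weights, bias):.2f}")
```

Here the attacker knows the detector's weights (a "white-box" setting), which makes the gradient trivial to compute; real detectors require backpropagation through a neural network, but the principle of following the gradient sign under a small per-pixel budget is the same.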

The method works even on compressed videos, although compression had until now tended to strip out such manipulations, the American scientists said. Even without access to the detector model, attackers using these adversarial examples were able to get past the most sophisticated detectors. According to the scientists, this is the first time deepfake detectors have been successfully attacked in this way.
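How can a detector be attacked without access to its model? One general approach, assumed here for illustration rather than taken from the article, is to estimate the gradient purely from the detector's output scores: query it on many slightly perturbed copies of a frame and average the score differences along random directions (an antithetic finite-difference estimator in the spirit of natural evolution strategies). The sketch below uses hypothetical names (`estimate_gradient`, `blackbox_evade`) and a made-up hidden linear detector that the attacker can only query.

```python
import math
import random


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def estimate_gradient(score_fn, frame, sigma, samples, rng):
    """Estimate d(score)/d(frame) using only calls to score_fn.

    For a random Gaussian direction u, (f(x + s*u) - f(x - s*u)) * u is on
    average about 2*s times the gradient, so we average and divide by 2*s.
    """
    grad = [0.0] * len(frame)
    for _ in range(samples):
        u = [rng.gauss(0.0, 1.0) for _ in frame]
        plus = [x + sigma * ui for x, ui in zip(frame, u)]
        minus = [x - sigma * ui for x, ui in zip(frame, u)]
        diff = score_fn(plus) - score_fn(minus)
        for i, ui in enumerate(u):
            grad[i] += diff * ui
    return [g / (2.0 * sigma * samples) for g in grad]


def blackbox_evade(score_fn, frame, eps=0.3, steps=10, sigma=0.01, samples=200, seed=0):
    """Iterated gradient-sign steps that lower the 'fake' score, using only
    query access to score_fn. Each pixel stays within eps of the original."""
    rng = random.Random(seed)
    step = eps / steps
    x = list(frame)
    for _ in range(steps):
        grad = estimate_gradient(score_fn, x, sigma, samples, rng)
        x = [xi - step * sign(gi) for xi, gi in zip(x, grad)]
        # Project back into the eps-box around the original frame and into [0, 1].
        x = [min(1.0, max(0.0, min(f + eps, max(f - eps, xi)))) for xi, f in zip(x, frame)]
    return x


if __name__ == "__main__":
    # Hypothetical hidden detector: the attacker may call score() but not read w or b.
    w, b = [2.0, -1.5, 3.0, 0.5], -1.0

    def score(frame):
        return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, frame)) + b)))

    frame = [0.6, 0.2, 0.5, 0.4]
    adv = blackbox_evade(score, frame)
    print(f"{score(frame):.2f} -> {score(adv):.2f}")
```

The price of not seeing the model is many extra queries per step (here `2 * samples`); the seeded random generator only makes the sketch reproducible.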

The scientists are sounding the alarm and recommend improving how detectors are trained, so that they can better recognize these specific manipulations and therefore this new kind of deepfake: “To use these deepfake detectors in practice, we argue that it is essential to evaluate them against an adaptive adversary who is aware of these defenses and is intentionally trying to foil these defenses. We show that the current state of the art methods for deepfake detection can be easily bypassed if the adversary has complete or even partial knowledge of the detector,” the researchers wrote. NVG
