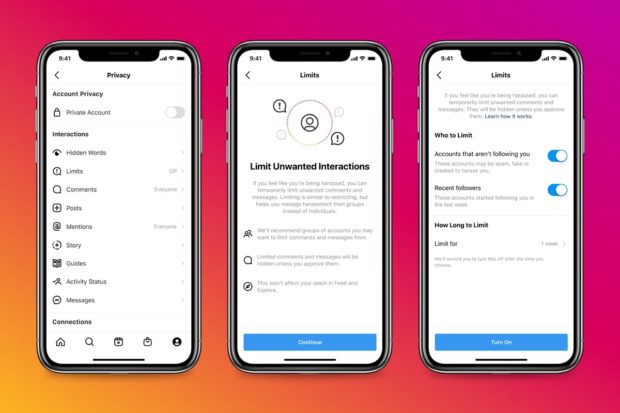

Users can choose how long the “Limits” function is activated. Image: courtesy of Instagram via ETX Daily Up

Instagram is not taking a break when it comes to enhancing its platform. The social network continues to roll out new features on a nearly daily basis. This time, the company is tackling cyberbullying by unveiling a new “Limits” feature and strengthening its warning policy. Will it be enough?

Faced with issues of cyberbullying on its platform, Instagram has already introduced several new features designed to better protect users, particularly young users.

Unveiled earlier this year in some regions, the “Hidden Words” feature lets users automatically filter direct message requests containing words they deem abusive. It will now be deployed worldwide “by the end of this month,” Instagram said on Aug. 10.

‘Limits’ for abusive messages

The online platform is going further with a new feature called “Limits.” This option will automatically hide “comments and DM requests from people who do not follow you, or who only recently followed you,” said Instagram.

The feature is a way to avoid the torrents of hate that some users, often public figures, can experience, such as being bombarded by hundreds of negative and even racist comments, as happened to three England players during Euro 2020.

The feature is handy because it lets users filter new comments without disabling them entirely, an important detail for creators, according to Instagram: “Creators also tell us they don’t want to switch off comments and messages completely; they still want to hear from their community and build those relationships. Limits allows you to hear from your long-standing followers, while limiting contact from people who might only be coming to your account to target you.”

To activate it, users go to “Settings” and then “Privacy.” Instagram announced that the feature is already available globally. The social network is also working on a way to detect “a spike in comments and DMs,” so that it can prompt users to turn on Limits.

Warnings proven to be effective?

In a similar vein, the Facebook-owned social network pointed out that it is making its warnings “stronger” in order to discourage users from posting comments that may be offensive or seen as harassment. The warning tells the user that the comment in question may be hidden by some users even if it is published on the platform, and that the user’s account could be deleted if it violates the rules of the social network “multiple times.”

While these warnings may still seem quite mild for such offenses, Instagram says its policy is effective nonetheless: “in the last week we showed warnings about a million times per day on average to people when they were making comments that were potentially offensive. Of these about 50% of the times the comment was edited or deleted by the user based on these warnings,” the company reported.

Instagram remains cautious, however, and acknowledges the enormous amount of work that still needs to be done on platforms to combat cyberbullying: “We know there’s more to do, including improving our systems to find and remove abusive content more quickly, and holding those who post it accountable. We also know that, while we’re committed to doing everything we can to fight hate on our platform, these problems are bigger than us.”

“We will continue to invest in organisations focused on racial justice and equity, and look forward to further partnership with industry, governments and NGOs to educate and help root out hate. This work remains unfinished, and we’ll continue to share updates on our progress,” it added. IB
