Mind-reading AI turns thoughts into text

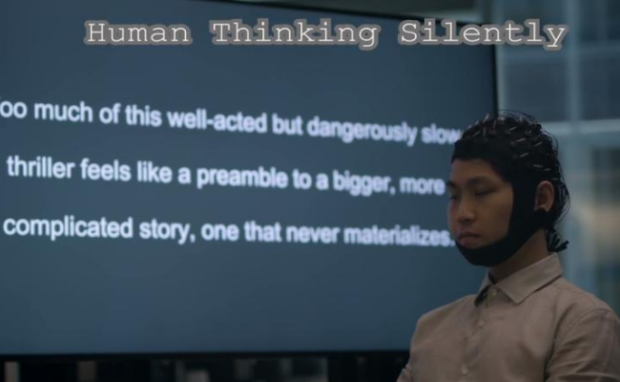

University of Technology Sydney (UTS) researchers developed a mind-reading AI program that turns thoughts into readable text. DeWave uses an EEG or electroencephalogram to decode brain waves. More importantly, it doesn’t require wearing implants or a tight-fitting cap, so that more people can wear it comfortably.

Technology has been helping disabled people reintegrate into daily life, from the humble wheelchair to the more advanced hearing aid. Nowadays, Japan enables its disabled persons to work as convenience store workers by piloting robots remotely. Soon, DeWave may help stroke and paralysis victims communicate with others.

This article will provide more details regarding the UTS mind-reading AI program. Later, I will discuss similar machine learning tools that interpret brain activity.

How does the mind-reading AI work?

UTS experts put their machine learning algorithm through rigorous training to match words with brain waves. Eventually, those word-to-signal matches became entries in DeWave’s “codebook.”

“It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding,” computer scientist Chin-Teng Lin said. “The integration with large language models is also opening new frontiers in neuroscience and AI.”
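To picture what a “codebook” with discrete encoding might look like, here is a minimal sketch and not the UTS team's actual code. It assumes each slice of brain-wave data has already been reduced to a short list of numbers, and it replaces each list with the index of the closest codebook entry. The function names, the two-number features, and all values are made up for illustration.

```python
import math

def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector`.

    Closeness is plain Euclidean distance (an assumption for this sketch).
    """
    best_index, best_dist = -1, math.inf
    for index, entry in enumerate(codebook):
        dist = math.dist(vector, entry)
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index

def quantize(features, codebook):
    """Turn a sequence of continuous feature vectors into discrete codes."""
    return [nearest_code(vector, codebook) for vector in features]

# Hypothetical codebook with three entries (made-up values).
codebook = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.0)]

# Hypothetical features standing in for processed EEG slices.
features = [(0.1, -0.2), (0.9, 1.2), (3.5, 0.4), (1.1, 0.8)]

codes = quantize(features, codebook)
print(codes)  # [0, 1, 2, 1]
```

In a setup like this, a language model would receive the list of codes rather than the raw signal, which is one way to read the “discrete encoding” Lin describes.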

Lin and his team used trained language models, combining the BERT and GPT systems. Then, they tested them on existing datasets that recorded people's eye movements and brain activity while they read text.

That method helped their mind-reading program translate brain wave patterns into words. Next, they trained DeWave further with an open-source large language model that turns words into sentences.

ScienceAlert said the AI tool performed best when translating verbs. In contrast, it typically translated nouns as pairs of words with the same meaning instead of the exact translations.

For example, it may interpret “the author” as “the man.” “We think this is because when the brain processes these words, semantically similar words might produce similar brain wave patterns,” explained first author Yiqun Duan.

“Despite the challenges, our model yields meaningful results, aligning keywords and forming similar sentence structures,” he added. However, the researchers admitted their method requires further refinement.

After all, receiving brain signals through a cap instead of implants reduces accuracy. DeWave only achieved 40% accuracy based on one of two sets of metrics in the study.
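This article does not say which metrics the study used. As an illustration only, the sketch below assumes a simple word-overlap score: the share of decoded words that also appear in the sentence the person actually read. The function name and both sentences are made up, apart from the “the author” and “the man” swap mentioned earlier, and the score skips refinements such as length penalties that published metrics often add.

```python
from collections import Counter

def unigram_precision(candidate, reference):
    """Share of candidate words that also occur in the reference.

    Repeated words are only credited as often as they appear in the
    reference, so repeating a correct word does not inflate the score.
    """
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(cand_tokens)
    matched = sum(min(count, ref_counts[token])
                  for token, count in cand_counts.items())
    return matched / len(cand_tokens)

# Hypothetical sentences for illustration.
reference = "the author wrote a long book"
decoded = "the man wrote a short book"

score = unigram_precision(decoded, reference)
print(round(score, 2))  # 0.67
```

Here four of the six decoded words match, so a near-miss such as “the man” for “the author” lowers the score even though the meaning is close.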

“The translation of thoughts directly from the brain is a valuable yet challenging endeavor that warrants significant continued efforts,” the team stated.

“Given the rapid advancement of Large Language Models, similar encoding methods that bridge brain activity with natural language deserve increased attention.”

Other mind-reading AI

Other countries are also developing mind-reading artificial intelligence software. For example, Osaka University researchers created one that turns thoughts into photos.

Yu Takagi led the Osaka team that conducted the study. The University of Minnesota provided brain scans from four subjects who viewed 10,000 photos.

Then, the AI team trained Stable Diffusion and DALL-E to link images with specific brain activity. Other researchers have tried this approach, but the images appeared blurry.

In response, the researchers added captions to the images. For example, they named the image of a clock tower “clock tower.”

The text-to-image apps would associate brain activity with certain pictures. As a result, the Osaka AI program needed less time and data to “learn” how to match pictures and brain waves.

Takagi tested the system on the Minnesota samples and found the AI could recreate the images with 80% accuracy.

Then, he and his team confirmed the results using brain scans from the same people looking at different images. Remarkably, the second test had similar results.

Note that they tested the AI system with only the same four people. Consequently, Takagi would have to retrain the program to work on others.

It would likely take several years before this technology becomes widely available. Yet, Iris Groen, a neuroscientist at the University of Amsterdam, remarked:

“The accuracy of this new method is impressive. These diffusion models have [an] unprecedented ability to generate realistic images.”

As a result, they might open new opportunities for studying the brain. Meanwhile, systems neuroscientist Shinji Nishimoto saw applications for other industries.

Conclusion

Sydney researchers developed a mind-reading AI that turns brain waves into text. Moreover, it achieves this feat without implanting electrodes in a person’s brain.

It requires further research and development before it becomes publicly available. Nevertheless, researchers hope it could help disabled persons communicate more effectively.

Learn more about the DeWave program on its arXiv webpage.