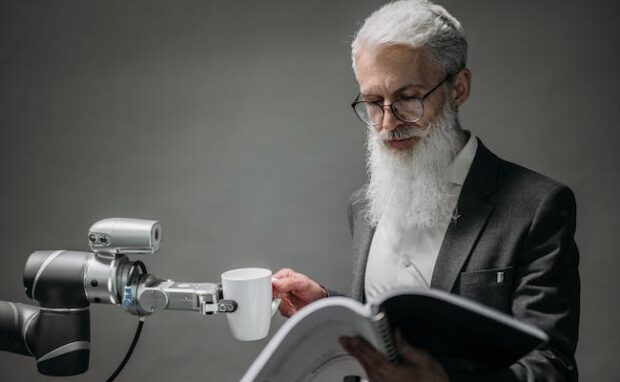

Dialects help robots gain trust

Researchers at Germany’s University of Potsdam found that people prefer robots that speak their dialect. Specifically, participants who were proficient in the Berlin dialect were more likely to trust a robot that spoke it. Tech companies could use this insight to develop more approachable robots.

It may seem obvious that we’d trust people who speak “our language,” but it turns out the principle also applies to machines. More countries want to deploy robots across industries, but first they must overcome the language barrier. Now that specific research supports this hypothesis, we could start building robots that meet this requirement.

This article will discuss why people prefer robots that speak their local dialects. Later, I will share other AI technologies related to speech.

How do dialects help robots?

Oregon State University defines dialects as “versions of a single language that are mutually intelligible, but that differ in systematic ways from each other.”

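For example, the Philippines’ national language is Filipino, which is based on Tagalog and spoken by most people in the capital region, Metro Manila. Head to a province like Cebu, however, and people speak Cebuano, which many Filipinos call a dialect even though linguists generally classify it as a separate language.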

As the digital age brings more robots into our industries, our interactions with them will soon increase. The study’s lead author, Katharina Kühne, suspected that language may shape those interactions.

“Surprisingly, people have mixed feelings about robots speaking in a dialect. Some like it, while others prefer standard language,” she said.

“This made us think: maybe it’s not just the robot, but also the people involved that shape these preferences,” Kühne added. The researchers surveyed 120 residents of Berlin and Brandenburg online to see how dialects affect robot acceptance.

Participants watched online videos of a male robot speaking either standard German or the Berlin dialect. According to Interesting Engineering, people often associate the Berlin dialect with the working class because of its informal, friendly connotations.

The dialect is also widely understood, even by non-native speakers who are fluent in German. Participants then rated the robot’s trustworthiness and competence.


Next, they completed a demographic questionnaire about their age, gender, length of residence, proficiency in the Berlin dialect, and how often they use it.

The results suggest that participants who rated the robot as competent also rated it as trustworthy. Respondents who were proficient in the Berlin dialect favored the robot that spoke it.

“If you’re good at speaking a dialect, you’re more likely to trust a robot that talks the same way. It seems people trust the robot more because they find a similarity,” Kühne said.

Other speech AI applications

We could have robots speaking our language, but what about AI using our voices? In a previous article, I discussed a project that could make that possible: OpenVoice.

The Canadian startup MyShell collaborated with researchers from MIT and Tsinghua University to create the program. Other voice-cloning AI tools exist today, but OpenVoice sets itself apart with several distinctive features.

First, Accurate Tone Color Cloning lets it replicate the tone color of a reference voice and generate speech in multiple languages and accents.

Second, Flexible Voice Style Control lets users modify specific characteristics of a voice sample, such as emotion, accent, pauses, and intonation. Third, Zero-shot Cross-lingual Voice Cloning lets OpenVoice generate speech in languages not included in its multi-lingual training dataset.

It can also generate speech that conveys sadness, happiness, and other emotions. Moreover, the program can make voices speak with different accents, such as British and Indian.

Many similar programs can generate speech only in English and a few other languages. OpenVoice, by contrast, can mix several languages in a single passage.

Meanwhile, Ruidong Zhang of Cornell University developed AI-powered glasses called EchoSpeech that could help people with speech impairments communicate again, according to a previous Inquirer Tech article.

The current version of EchoSpeech enables users to communicate with others via smartphone. It uses an AI-powered sonar system to read the user’s lips.


Sonar works by sending out sound waves that bounce off surrounding objects. A receiver then picks up the returning echoes, and the time each echo takes to come back reveals how far away an object is, which helps map the environment.
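To make the echo-timing idea concrete, here is a minimal sketch of basic sonar ranging. It is not how EchoSpeech itself works (the article says an AI model analyzes whole echo profiles); it only shows the underlying principle. It assumes sound travels at roughly 343 m/s in air, and the function name `echo_distance` is hypothetical.

```python
# Approximate speed of sound in dry air at about 20 °C (an assumption; it varies with temperature).
SPEED_OF_SOUND_AIR = 343.0  # metres per second


def echo_distance(round_trip_s: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Hypothetical helper: distance to a reflecting object from an echo's round-trip time.

    The sound wave travels to the object and back, so the one-way
    distance is half the total path: d = speed * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed * round_trip_s / 2


# An echo that returns after 2 milliseconds implies an object about 0.343 m away.
print(round(echo_distance(0.002), 3))
```

Halving the product is the key step: the measured time covers the trip out and back, so using it directly would double the estimated distance.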

An AI algorithm from Cornell’s Smart Computer Interfaces for Future Interactions (SciFi) Lab then analyzes these echo profiles, reaching about 95% accuracy.

“For people who cannot vocalize sound, this silent speech technology could be an excellent input for a voice synthesizer. It could give patients their voices back,” Zhang said.

Conclusion

German researchers found that people trust robots more when the robots speak their local dialect. Dialect support could be an important feature if we want everyone to benefit from AI and robots.

For example, we could have ChatGPT speak Tagalog to convince more Filipinos to use it. Believe it or not, you can already ask the chatbot to talk to you in your language, too!

Learn more about the impact of local dialects on robot trustworthiness at Frontiers. Also, check the latest digital tips and trends at Inquirer Tech.