The 4th USAID conference discussed approaches to AI governance

On September 24, 2024, USAID held its 4th conference, in collaboration with the National Economic and Development Authority (NEDA) and the Philippine Competition Commission.

The event, “The Genie Is Out of The Bottle: Approaches to AI Governance,” shared examples of how governments use artificial intelligence.


Specifically, it featured experts from the United States and the United Kingdom who explained how their nations promote AI innovation while protecting workers.

AI governance in the US and the UK

The first speaker was Daniela Medina, a digital policy specialist at the US Department of Commerce. She began by explaining the United States' overarching AI governance objective:

“Our goal is to develop a flexible and adaptable governance structure to account for the rapid development of AI…” she said.

“… and allow enough flexibility to adapt to the changes to the technology and different AI applications that will be developed in the future,” Medina continued. “As well as assure that we’re not stifling innovation and allowing inter-operability between our policies and that of our international partners.”

Then, Medina elaborated on the United States' AI governance principles and explained how various agencies promote them:

- Safety and Security

- Innovation and Competition

- Supporting American Workers

- Advancing Equity and Civil Rights

- Consumer Protection

- Privacy

- Managing Risks of Federal Use of AI

- International Leadership

The digital policy specialist stated that the US uses outputs from multilateral bodies as reference points for its AI governance approaches.

These include the Organization for Economic Cooperation and Development (OECD) and the United Nations.

Afterward, Medina handed the stage over to Micheal Mudd, a digital trade economist and an accredited trainer for the British Standards Institution.

He summarized the European Union's approach to AI regulation:

- Prohibiting applications such as emotion recognition in workplaces and schools, social credit scoring, and manipulation of people's behavior

- Including rules on "high-risk" systems, such as those used in elections and healthcare

- Imposing compliance and transparency requirements on General Purpose AI

AI governance and the Philippines

Mudd also explained what AI means for the Philippine business process outsourcing (BPO) industry. He said that staff turnover in the industry ranges from 30% to 40% due to the following:

- Stressful work environment

- Limited career advancement

- Low pay

- Repetitive tasks

The digital trade economist said that companies are deploying increasingly capable AI agents to handle repetitive questions.

As a result, human agents can focus more on complex ones.

Fortunately, the growth of online business is boosting the BPO industry, which may lead to lower turnover.


Later, the event moved on to a panel discussion. The panelists were the two speakers, NEDA Undersecretary Krystal Uy, and Department of Information and Communications Technology (DICT) Director Maria Victoria Castro.

Dr. Roberto Galang, dean of the John Gokongwei School of Management of the Ateneo de Manila University, moderated the discussion.

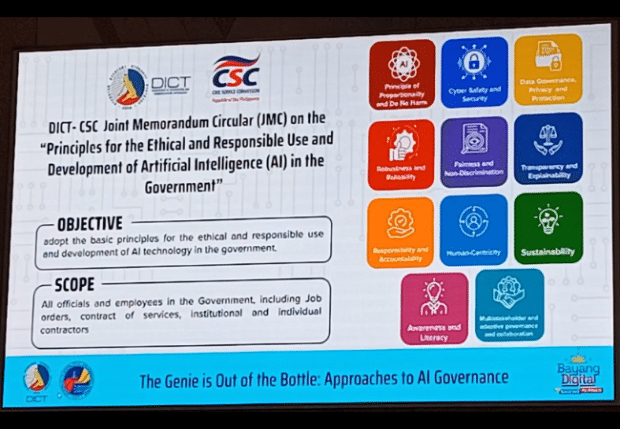

Castro shared the Philippine government’s plans to draft a joint memorandum circular regarding its use of artificial intelligence.

Its title is "Principles for the Ethical and Responsible Use and Development of Artificial Intelligence (AI) in the Government."

She explained that the DICT based the memorandum on the following international AI frameworks:

- The World Health Organization's Ethics and Governance of Artificial Intelligence for Health

- ASEAN Guide on AI Governance and Ethics

- UNESCO's Recommendation on the Ethics of Artificial Intelligence

- UN General Assembly Resolution on Seizing the Opportunities of Safe, Secure and Trustworthy AI Systems for Sustainable Development

Then, Castro outlined the contents of the draft memorandum circular:

- Objective: Adopt the basic principles for the ethical and responsible use and development of AI technology in the government.

- Scope: All government officials and employees, including job order and contract-of-service workers, as well as institutional and individual contractors.

"We are putting emphasis on the authority of humans on AI. It should be the humans that will make the decisions, not the AI," Director Castro explained.