Facebook takes down over 1.5B fake accounts in six months

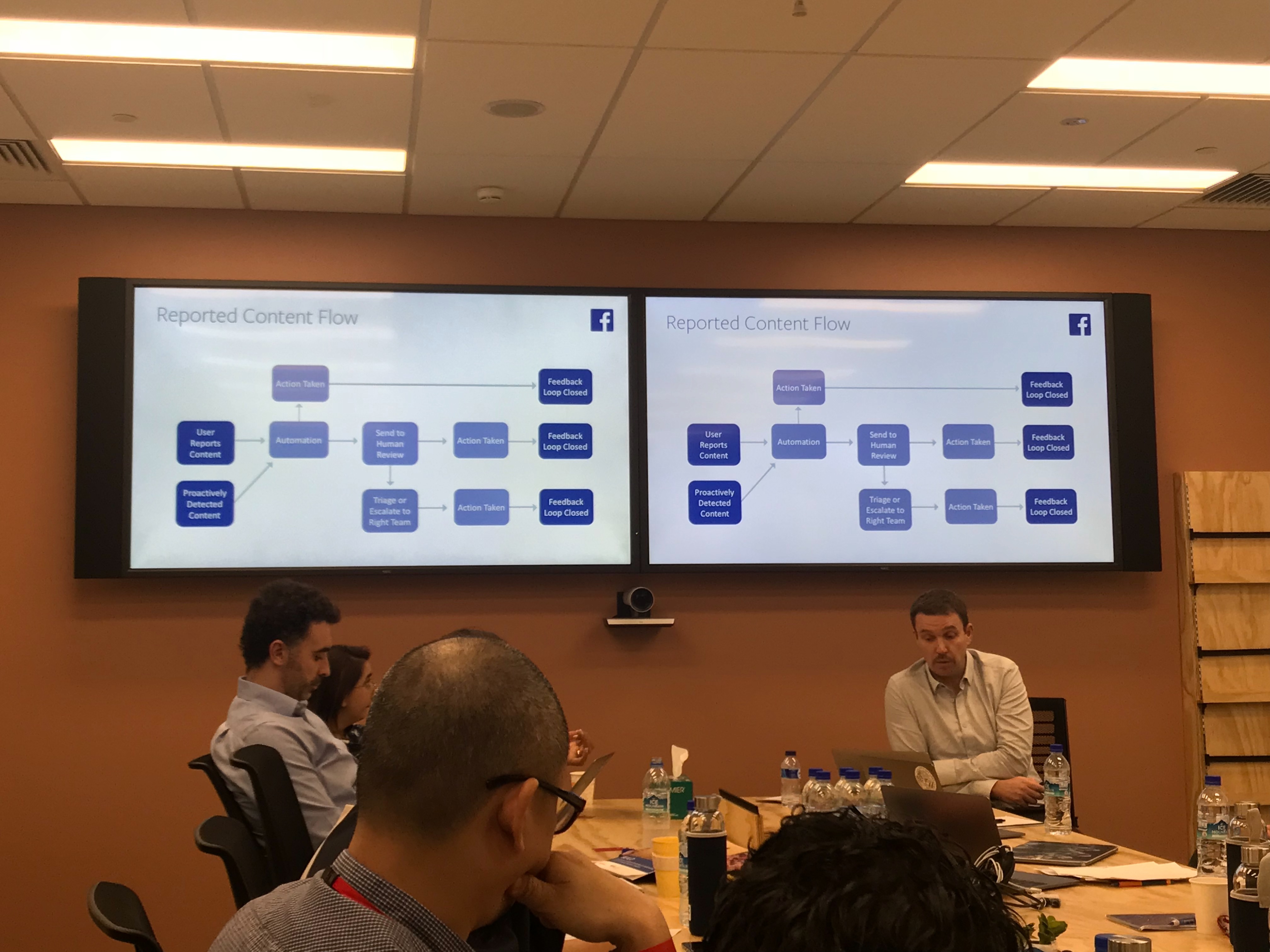

Facebook officials explain the company’s policies during a roundtable discussion with the media in Singapore on Nov. 13. (Photo by Maila Ager/INQUIRER.net)

In just six months, Facebook took down over 1.5 billion fake accounts.

The social media giant disclosed this in its second and latest Community Standards Enforcement Report posted on its website on Thursday.


Facebook said the second report shows the company’s enforcement efforts on policies against adult nudity and sexual activity, fake accounts, hate speech, spam, terrorist propaganda, and violence and graphic content, from April 2018 to September 2018.

“Since our last report, the amount of hate speech we detect proactively before anyone reports it, has more than doubled from 24% to 52%,” it said.

According to the report, the 24 percent detection rate was recorded from October to December 2017, while the 52 percent rate was recorded from July to September 2018.

“The majority of posts that we take down for hate speech are posts that we’ve found before anyone reported them to us,” Facebook said.

“This is incredibly important work and we continue to invest heavily where our work is in the early stages — and to improve our performance in less widely used languages.”

Meanwhile, Facebook’s proactive detection rate for violence and graphic content increased by 25 percentage points, from 72% in the last quarter of 2017 to 97% in the third quarter of 2018.

“We also took down more fake accounts in Q2 and Q3 than in previous quarters, 800 million and 754 million respectively,” the company noted.

“Most of these fake accounts were the result of commercially motivated spam attacks trying to create fake accounts in bulk,” it added.

Facebook said the prevalence of fake accounts remained steady at 3% to 4% of monthly active users because the company was able to remove most of them within minutes of registration.

In the third quarter of 2018, Facebook said it also took action on 15.4 million pieces of violent and graphic content.

“This included removing content, putting a warning screen over it, disabling the offending account and/or escalating content to law enforcement,” it said.

“This is more than 10 times the amount we took action on in Q4 2017. This increase was due to continued improvements in our technology that allows us to automatically apply the same action on extremely similar or identical content,” it further said.

The report also includes two new categories of data: bullying and harassment, and child nudity and sexual exploitation of children.

The company explained, however, that bullying and harassment “tend to be personal and context-specific,” so a person needs to report the behavior before Facebook can identify or remove it.

“This results in a lower proactive detection rate than other types of violations,” it said.

“In the last quarter, we took action on 2.1 million pieces of content that violated our policies for bullying and harassment — removing 15% of it before it was reported. We proactively discovered this content while searching for other types of violations,” Facebook said.
