Study finds ChatGPT has higher emotional awareness than humans

Researchers found ChatGPT exhibits higher emotional awareness than humans. Zohar Elyoseph and his team presented the AI program with specific scenarios to assess how it describes people’s feelings. The OpenAI tool outperformed the general population on the Levels of Emotional Awareness Scale (LEAS).

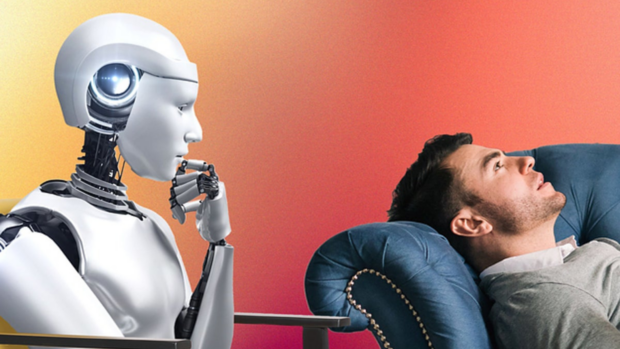

People worldwide are amazed by ChatGPT’s ability to mimic human speech, and many have started using it as a virtual companion or therapist. This recent study suggests that artificial intelligence could help fill these roles and improve mental health worldwide.

This article discusses the recent study on ChatGPT’s emotional awareness. Specifically, I will explain the research methods behind this finding. Then, I will describe how people already use the AI program for mental health support and companionship.

What did researchers learn about ChatGPT?

Neuroscience News (@NeuroscienceNew) shared the paper on May 13, 2023: “ChatGPT Outperforms Humans in Emotional Awareness Evaluations” by Zohar Elyoseph et al., Frontiers in Psychology, https://t.co/yADnhM79k7

Zohar Elyoseph, Dorit Hadar-Shoval, Kfir Asraf, and Maya Lvovsky published a paper in Frontiers in Psychology, also available on PubMed Central, on ChatGPT’s emotional awareness. They tested the free version of ChatGPT in two sessions: January 19 to 20 and February 15, 2023.

At the time of writing, the AI program cannot have or report emotions of its own. For that reason, the researchers adapted scenarios from the Levels of Emotional Awareness Scale.

The test normally asks human respondents to imagine themselves in each scenario and describe how “you” would feel, as well as how the other person in the scene would feel. Because that framing does not work for a machine learning model, the researchers replaced “you” with “human.”

Running two separate testing sessions helped the researchers validate their results. The first session produced a Z-score of 2.84, and the second produced 4.26. A Z-score measures how many standard deviations a value lies above or below the average (mean) score of a reference group.
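To make the statistic concrete, here is a minimal Python sketch. A Z-score is the value minus the group mean, divided by the group’s standard deviation. The second function estimates what share of a group would score lower, but only under the assumption that scores are normally distributed. The function names and the reference mean and standard deviation below are hypothetical placeholders, not the study’s actual norms.

```python
import math


def z_score(value: float, mean: float, std_dev: float) -> float:
    """Return how many standard deviations `value` lies above (+) or below (-) the mean."""
    if std_dev <= 0:
        raise ValueError("std_dev must be positive")
    return (value - mean) / std_dev


def share_below(z: float) -> float:
    """Fraction of a group expected to score below z.

    Assumes scores follow a normal distribution; this is the standard
    normal cumulative distribution function written with math.erf.
    """
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical reference group: mean 50, standard deviation 10 (placeholder numbers).
example_z = z_score(62.0, 50.0, 10.0)
print(f"Example Z-score: {example_z:.2f}")

# The two Z-scores reported for ChatGPT, plus Z = 1 for comparison.
for z in (1.0, 2.84, 4.26):
    print(f"Z = {z:.2f}: about {share_below(z):.3%} of the group would score lower")
```

Under the normality assumption, a Z-score of 1 corresponds to roughly the 84th percentile, which is why scores of 2.84 and 4.26 sit far above the typical respondent.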

Any positive Z-score is above average, and a score above 1 is more than one standard deviation above the mean, so both results placed ChatGPT’s emotional awareness well above that of most people. Its responses were also rated as highly accurate, earning a 9.7 out of 10. Here’s an example of ChatGPT’s response to LEAS question 6:


“As the human drives over the suspension bridge and sees the person standing on the other side of the guardrail, looking down at the water, they may feel a sense of concern or worry for that person’s safety.”

“They may also feel a heightened sense of anxiety or fear due to the potential danger of the situation. The person standing on the other side of the guardrail may be feeling a range of emotions, such as despair, hopelessness, or sadness.”

“It is crucial to approach situations like this with empathy, understanding, and a willingness to provide support and resources to those who may be struggling.”

What are its implications?

Photo Credit: buzzfeednews.com

Neuroscience News says these findings could profoundly impact the field of medicine. For example, the AI tool could be incorporated into cognitive training programs for patients with emotional awareness impairments.

The science news outlet also suggests it might facilitate psychiatric assessment and treatment and help advance research on “emotional language.”

People were already turning to ChatGPT for mental health support before Professor Elyoseph and his colleagues published their report. For example, 31-year-old mortgage broker Freddie Chipres says he uses the bot as a therapist because it is convenient.

“It’s like if I’m having a conversation with someone. We’re going back and forth,” Chipres said, describing the AI tool as if it were a person. “This thing is listening. It’s paying attention to what I’m saying and giving me answers.”


Tatum, a US military veteran, told SBS News he couldn’t access affordable mental health support, so he turned to ChatGPT. “I used to get my treatment (for) depression in the military, but since I have left, I have not access to that kind of healthcare anymore,” he said.

“It’s cheaper to get mental health advice from an AI chatbot in comparison to a psychologist – even with insurance,” he added. However, Sahra O’Doherty, director of the Australian Association of Psychologists, warned it was a “worrying trend that people are resorting to AI, particularly in its infancy.”

O’Doherty added, “I very much feel it is dangerous for a person to be seeking mental health support from someone who isn’t familiar with the physical location that that person is living in.”

Conclusion

Zohar Elyoseph and his fellow researchers found that ChatGPT scored higher on emotional awareness than the general population. Consequently, the AI tool might become a virtual assistant for mental health experts.

However, we need further study before incorporating generative artificial intelligence into healthcare systems. After all, OpenAI did not design ChatGPT for medical purposes.

Fortunately, companies like Google have been developing AI programs for hospitals. Learn more about that research and other digital trends at Inquirer Tech.