AI deepfakes and their deeper impacts

Hang around Twitter lately, and you’ll notice major buzz regarding Taylor Swift’s AI deepfakes. They feature the young pop icon performing questionable acts with Kansas City Chiefs fans. In response, US lawmakers swore to write and implement laws against fabricated AI pictures to protect Swift and others from digital fakery.

Most would scoff and see a celebrity getting so much special attention that people write laws about her. However, a closer look reveals a more troubling future concerning the rampant use of artificial intelligence programs: one where you or people you know might feature in compromising scenarios for everyone to see.

Artificial intelligence is here to stay, so we must understand it to reap its benefits and mitigate its risks. Specifically, let’s focus on the potential effects of AI deepfakes and what we should do to protect information and privacy.

What are the future impacts of AI deepfakes?

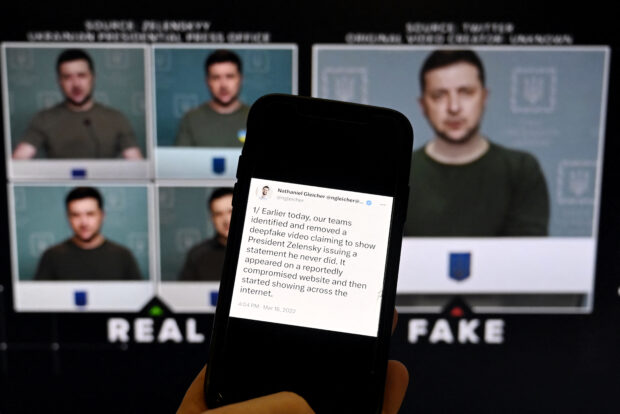

AI deepfakes use artificial intelligence to create hyperrealistic photos, voices, videos, and other media. Recently, they have become notorious for featuring people in embarrassing or compromising made-up situations.

We’ve always had such falsified images online, but they were harder to make. You needed to know Adobe Photoshop or similar image-altering programs to create them.

That is why fewer such images spread roughly 10 years ago. Nowadays, the rise of ChatGPT has inspired many to use generative AI models to create media.

You can find many websites that will edit any image however you wish. Write a few sentences, and the programs will do the work. Arguably, they require no specialized skills from users.

That is why we have more people creating falsified images featuring celebrities. Consequently, misinformation and disinformation are the most pressing negative consequences of deepfakes.

You could name any famous person in these programs and place them in any situation you like. Believe it or not, it was easy to spot AI deepfakes months ago because they had telltale signs like missing or extra fingers.

Those flaws are largely gone because these programs have significantly improved in a few months. Also, those upgrades may encourage others to use them against regular people.

Specifically, they could use AI for identity theft. I explained how to identify AI images in my past guide, but those tips only worked in 2023.

Identity theft can be devastating, especially if you’re looking for a job. More importantly, Pope Francis warns that deepfakes may ruin the public’s trust in the truth. I discussed his statement in this article.

If you can’t determine what is real and what is not, you might dismiss any news report altogether. Some may stick to their long-standing beliefs without ever adapting to new information.

What are some of the popular AI deepfakes?

The boys in Brooklyn could only hope for this level of drip pic.twitter.com/MiqkcLQ8Bd

— Nikita S (@singareddynm) March 25, 2023

Ironically, the Pope is the subject of some of the most infamous AI deepfakes. In March 2023, fabricated images circulated online showing the Holy See leader donning an expensive jacket, shades, and a golden cross necklace.

In response, the Vatican had to debunk rumors that Pope Francis was rocking the streets in designer clothes. You can read more about it in my other article.

Another celebrity sparked debates regarding AI deepfakes’ impact on politics. In the same month, images of former US President Donald Trump’s arrest circulated on the Web, including those featured by Ars Technica.

The images caused a firestorm on Twitter, with Democrats denouncing the former head of state. In contrast, some Republicans felt outraged by the false images.

Of course, the most talked-about ones now are Taylor Swift’s explicit AI images involving Kansas City Chiefs fans. They were so impactful that some US states proposed laws against false AI content.

What can we do against AI deepfakes?

The most recent action involves Missouri and its “Taylor Swift Act.” FOX 2 says Missouri State Rep. Adam Schwadron introduced it as HB 2573.

It seeks to address unauthorized disclosures of individuals’ likenesses by allowing anyone affected to bring civil actions. “These images can cause irrevocable emotional, financial, and reputational harm,” said Schwadron.

“The worst part is that women are disproportionately impacted by these deepfakes. These fake images can be just as crushing, harmful, and destructive as the real thing.”

“There’s already enough bad in this world, and as the father of two daughters, I want to ensure that no one should have to fear this kind of assault,” he added.

Soon, other countries will likely implement similar laws to anticipate such risks. In the meantime, everyone should expand their media literacy.

We should learn where to get credible sources so we don’t fall prey to provocative falsehoods. Whenever we see shocking news or content, we must pause and check sources to verify them.

There is no easy way to fight AI deepfakes. We have AI detectors, but those quickly become obsolete because deepfake technology advances faster than detection does.

Banning such tools might also prove futile as there are so many online. Also, rooting out their creators is next to impossible because many tools don’t require personal accounts.

Global conversation

Taylor Swift’s AI deepfakes have sparked a global conversation about AI images and their impacts. Contrary to popular belief, this issue isn’t only about a celebrity.

This is about you and your loved ones staying safe against others who might use your likenesses in AI pictures. Fortunately, you’re already taking the first step in keeping yourself safe by reading this article.

Understanding the latest artificial intelligence trends will help you avoid their risks and reap their benefits. Learn more at Inquirer Tech.

Frequently asked questions about AI deepfakes

What are AI deepfakes?

Status Labs defines deepfakes as “synthetic media in which a person in an existing image, video, or recording is replaced with someone else’s likeness.” The term is a portmanteau of the words “deep learning” and “fake,” suggesting it uses machine learning and AI to create content.

Why are deepfakes major threats?

Everyone has access to free online tools for creating deepfake images and videos. Their spread may have started with celebrities, but they may soon involve regular people like you and me. As a result, we might see more of our friends and family appearing in fabricated scenarios that may compromise our identities and reputations.

How do you make AI deepfakes?

Some of the most popular tools for AI deepfakes include Stable Diffusion and Midjourney. You could make an AI image featuring a celebrity using only text prompts. However, you would need to provide a sample image if you want to feature a regular person instead. Read my articles about Stable Diffusion and Midjourney to learn more about them.